Delta Lake stores table data as a series of data files, but it also stores a lot of other information.

Which of the following is stored alongside data files when using Delta Lake?

Correct Answer:

C

Delta Lake stores table data as a series of data files in a specified location, but it also stores table metadata in a transaction log. The table metadata includes the schema, partitioning information, table properties, and other configuration details. It is stored alongside the data files and is updated atomically with every write operation. It can be accessed using the DESCRIBE DETAIL command or the DeltaTable class in Scala, Python, or Java, and it can be enriched with custom tags or user-defined commit messages using the TBLPROPERTIES or userMetadata options. References:

✑ Enrich Delta Lake tables with custom metadata

✑ Delta Lake Table metadata - Stack Overflow

✑ Metadata - The Internals of Delta Lake
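As a rough illustration of metadata living next to the data files, the sketch below builds a simplified mock of a table directory and reads the metadata back from a commit file in its `_delta_log` folder. It assumes the usual layout of one JSON action per line; the table name `events`, the columns, and the action contents are hypothetical and heavily trimmed, and the helper functions are not part of any Delta Lake API.

```python
import json
import tempfile
from pathlib import Path


def write_mock_table(root: Path) -> Path:
    """Create a mock table directory: one placeholder data file plus a _delta_log commit."""
    log_dir = root / "_delta_log"
    log_dir.mkdir(parents=True)
    (root / "part-00000.snappy.parquet").write_bytes(b"")  # empty placeholder, not real Parquet
    # Hypothetical schema for illustration only.
    schema = {"type": "struct", "fields": [
        {"name": "id", "type": "long", "nullable": True, "metadata": {}},
        {"name": "country", "type": "string", "nullable": True, "metadata": {}},
    ]}
    # Trimmed-down actions; a real commit carries more fields than shown here.
    actions = [
        {"commitInfo": {"operation": "WRITE", "userMetadata": "initial load"}},
        {"metaData": {
            "schemaString": json.dumps(schema),
            "partitionColumns": ["country"],
            "configuration": {"delta.appendOnly": "true"},
        }},
        {"add": {"path": "part-00000.snappy.parquet", "dataChange": True}},
    ]
    commit = log_dir / "00000000000000000000.json"
    commit.write_text("\n".join(json.dumps(a) for a in actions))
    return commit


def read_table_metadata(commit: Path) -> dict:
    """Return the metaData action from one commit file (one JSON action per line)."""
    for line in commit.read_text().splitlines():
        action = json.loads(line)
        if "metaData" in action:
            return action["metaData"]
    raise ValueError("no metaData action in commit")


with tempfile.TemporaryDirectory() as tmp:
    commit_file = write_mock_table(Path(tmp) / "events")
    table_metadata = read_table_metadata(commit_file)
    schema_fields = json.loads(table_metadata["schemaString"])["fields"]
    column_names = [field["name"] for field in schema_fields]
    print(column_names, table_metadata["partitionColumns"])
```

On a real table the same information would normally be read through DESCRIBE DETAIL or the DeltaTable class rather than by parsing the log by hand.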

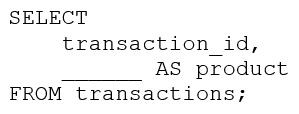

A data engineer is working with a nested array column products in table transactions. They want to expand the table so each unique item in products for each row has its own row where the transaction_id column is duplicated as necessary.

They are using the following incomplete command:

Which of the following lines of code can they use to fill in the blank in the above code block so that it successfully completes the task?

Correct Answer:

B

The explode function transforms a DataFrame column of arrays or maps into multiple rows, duplicating the other columns' values. In this context, it expands the nested array column products in the transactions table so that each item in products for each row has its own row and the transaction_id column is duplicated as necessary. References: Databricks Documentation
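The row-expansion behavior described above can be imitated in plain Python. This is only a sketch of the semantics, not Spark code: the helper `explode_products` and the sample rows are hypothetical, and the output column name `product` is an assumption.

```python
from typing import Dict, Iterable, List


def explode_products(rows: Iterable[Dict]) -> List[Dict]:
    """Emit one output row per element of each row's `products` array.

    Rows whose array is empty produce no output rows.
    """
    return [
        {"transaction_id": row["transaction_id"], "product": product}
        for row in rows
        for product in row["products"]
    ]


# Hypothetical sample rows standing in for the transactions table.
transactions = [
    {"transaction_id": 1, "products": ["apple", "pear"]},
    {"transaction_id": 2, "products": ["milk"]},
]

exploded = explode_products(transactions)
for row in exploded:
    print(row)
```

Transaction 1 appears twice in the result, once per product, which is the duplication of transaction_id that the question asks for.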

The incomplete command is a SQL query. It begins with SELECT, and transaction_id is one of the selected columns. Blanks marked by underscores stand in for the missing parts, which appear to include a column alias and part of the FROM clause. The query ends with "FROM transactions;", so the data is selected from the transactions table.

In which of the following situations will the mean value and median value of a variable be meaningfully different?

Correct Answer:

E

The mean value of a variable is the average of all the values in a data set, calculated by dividing the sum of the values by the number of values. The median value of a variable is the middle value of the ordered data set, or the average of the middle two values if the data set has an even number of values. The mean is sensitive to outliers, which are values that are very different from the rest of the data. Outliers can skew the mean and make it less representative of the central tendency of the data. The median is more robust to outliers, as it depends only on the middle values of the data. Therefore, when the variable contains a lot of extreme outliers, the mean and the median will be meaningfully different: the mean is pulled towards the outliers, while the median stays close to the majority of the data. References: Difference Between Mean and Median in Statistics (With Example) - BYJU'S
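A small numeric check makes the effect concrete. The sample values below are made up for illustration; the sketch uses Python's standard `statistics` module.

```python
import statistics

# Hypothetical sample: five typical values, then the same values plus one extreme outlier.
typical = [10, 11, 12, 12, 13]
with_outlier = typical + [1000]

mean_typical = statistics.mean(typical)
median_typical = statistics.median(typical)
mean_outlier = statistics.mean(with_outlier)
median_outlier = statistics.median(with_outlier)

print("without outlier:", mean_typical, median_typical)
print("with outlier:   ", mean_outlier, median_outlier)
```

Without the outlier the mean and median are close together. Adding a single value of 1000 moves the mean well above every typical value, while the median stays at 12.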

A data analyst has been asked to configure an alert for a query that returns the income in the accounts_receivable table for a date range. The date range is configurable using a Date query parameter.

The Alert does not work.

Which of the following describes why the Alert does not work?

Correct Answer:

D

The intended answer is that queries using query parameters cannot be used with Alerts, because an Alert runs without user input and so cannot take dynamic values. The Databricks documentation describes the behavior more precisely: Alerts run queries with parameters using the default value specified in the SQL editor for each parameter. Therefore, if the query uses a Date query parameter, the alert always evaluates the default date range, regardless of the actual date. This may cause the alert to not work as expected, or to not trigger at all. References:

✑ Databricks SQL alerts: This is the official documentation for Databricks SQL alerts, where you can find information about how to create, configure, and monitor alerts, as well as the limitations and best practices for using alerts.
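The default-value behavior can be pictured with a toy model. The sketch below is not the Databricks API: the `render` and `alert_query` helpers, the parameter names `start_date` and `end_date`, the column `invoice_date`, and the default dates are all hypothetical. It only shows why a scheduled run with fixed defaults never follows the calendar.

```python
import re
from datetime import date

# Hypothetical parameterized query; only `income` and `accounts_receivable` come from the question.
QUERY_TEMPLATE = (
    "SELECT sum(income) AS income FROM accounts_receivable "
    "WHERE invoice_date BETWEEN '{{ start_date }}' AND '{{ end_date }}'"
)

# Assumed defaults, standing in for the values saved in the SQL editor.
DEFAULTS = {"start_date": date(2023, 1, 1), "end_date": date(2023, 1, 31)}


def render(template: str, params: dict) -> str:
    """Substitute {{ name }} placeholders with the given parameter values."""
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: str(params[m.group(1)]), template)


def alert_query(run_date: date) -> str:
    """Model a scheduled alert run: there is no user input, so the saved defaults are used.

    run_date is deliberately ignored to show that the date range does not track it.
    """
    return render(QUERY_TEMPLATE, DEFAULTS)


first_run = alert_query(date(2023, 2, 1))
later_run = alert_query(date(2023, 6, 1))
print(first_run)
print(first_run == later_run)
```

Both runs produce the same query text, so the alert keeps checking the same date range no matter when it fires.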

A data team has been given a series of projects by a consultant that need to be implemented in the Databricks Lakehouse Platform.

Which of the following projects should be completed in Databricks SQL?

Correct Answer:

C

Databricks SQL is a service that allows users to query data in the lakehouse using SQL and create visualizations and dashboards. One of the common use cases for Databricks SQL is to combine data from different sources and formats into a single, comprehensive dataset that can be used for further analysis or reporting. For example, a data analyst can use Databricks SQL to join data from a CSV file and a Parquet file, or from a Delta table and a JDBC table, and create a new table or view that contains the combined data. This can simplify data management and governance, and improve data quality and consistency. References:

✑ Databricks SQL overview

✑ Databricks SQL use cases

✑ Joining data sources
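The idea of joining two sources into one view can be sketched with any SQL engine. The example below uses Python's built-in `sqlite3` purely as a stand-in for Databricks SQL; the tables `orders` and `customers`, their columns, and the view name `customer_totals` are hypothetical.

```python
import sqlite3

# In-memory SQLite database used as a stand-in SQL engine (not Databricks SQL).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL);
    CREATE TABLE customers (customer_id INTEGER, name TEXT);
    INSERT INTO orders VALUES (1, 10, 25.0), (2, 11, 40.0), (3, 10, 15.0);
    INSERT INTO customers VALUES (10, 'Ada'), (11, 'Grace');

    -- Combine the two sources into a single view for reporting.
    CREATE VIEW customer_totals AS
        SELECT c.name AS name, SUM(o.amount) AS total
        FROM orders AS o
        JOIN customers AS c ON c.customer_id = o.customer_id
        GROUP BY c.name;
""")

totals = conn.execute(
    "SELECT name, total FROM customer_totals ORDER BY name"
).fetchall()
conn.close()
print(totals)
```

In Databricks SQL the two inputs could be tables backed by different formats, but the join and view definition would follow the same pattern.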