- (Exam Topic 3)

You have five Power BI reports that contain R script data sources and R visuals.

You need to publish the reports to the Power BI service and configure a daily refresh of datasets. What should you include in the solution?

Correct Answer:

D

To schedule refresh of your R visuals or dataset, enable scheduled refresh and install an on-premises data gateway (personal mode) on the computer containing the workbook and R.

Reference: https://docs.microsoft.com/en-us/power-bi/connect-data/desktop-r-in-query-editor

- (Exam Topic 3)

You have a deployment pipeline for a Power BI workspace. The workspace contains two datasets that use import storage mode.

A database administrator reports a drastic increase in the number of queries sent from the Power BI service to an Azure SQL database since the creation of the deployment pipeline.

An investigation into the issue identifies the following:

* One of the datasets is larger than 1 GB and has a fact table that contains more than 500 million rows.
* When publishing dataset changes to development, test, or production pipelines, a refresh is triggered against the entire dataset.

You need to recommend a solution to reduce the size of the queries sent to the database when the dataset changes are published to development, test, or production.

What should you recommend?

Correct Answer:

B

A composite model in Power BI means part of your model can be a DirectQuery connection to a data source (for example, SQL Server database), and another part as Import Data (for example, an Excel file). Previously, when you used DirectQuery, you couldn’t even add another data source into the model.

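DirectQuery and Import Data have different advantages. Import Data loads the data into an in-memory cache for fast queries but must be refreshed, while DirectQuery leaves the data in the source and queries it on demand, which suits tables that are too large to import.

A composite model combines the strengths of both in one model: you can work with big data tables by using DirectQuery and still import smaller tables by using Import Data.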

Reference:

https://radacad.com/composite-model-directquery-and-import-data-combined-evolution-begins-in-power-bi

https://powerbi.microsoft.com/en-us/blog/five-new-power-bi-premium-capacity-settings-is-available-on-the-por

- (Exam Topic 3)

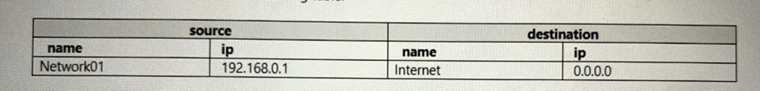

You are using an Azure Synapse Analytics serverless SQL pool to query network traffic logs in the Apache Parquet format. A sample of the data is shown in the following table.

You need to create a Transact-SQL query that will return the source IP address.

Which function should you use in the select statement to retrieve the source IP address?

Correct Answer:

A

- (Exam Topic 3)

You are using a Python notebook in an Apache Spark pool in Azure Synapse Analytics. You need to present the data distribution statistics from a DataFrame in a tabular view. Which method should you invoke on the DataFrame?

Correct Answer:

B

pandas.DataFrame.corr computes pairwise correlation of columns, excluding NA/null values.

Incorrect:

* freqItems (pyspark.sql.DataFrame.freqItems) finds frequent items for columns, possibly with false positives, by using the frequent element count algorithm described in https://doi.org/10.1145/762471.762473, proposed by Karp, Schenker, and Papadimitriou.

* summary is used for index.

* There is no pandas method for rollup, and rollup would not be correct anyway.

Reference: https://pandas.pydata.org/docs/reference/api/pandas.DataFrame.corr.html
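To make concrete what `pandas.DataFrame.corr` computes, here is a minimal pure-Python sketch of pairwise Pearson correlation. Like the pandas behavior described above, it skips any row where either value of a pair is missing. The helper name `pairwise_corr` and the dict-of-lists input are illustrative assumptions; this is not the pandas implementation.

```python
import math
from typing import Dict, List, Optional


def pairwise_corr(columns: Dict[str, List[Optional[float]]]) -> Dict[str, Dict[str, float]]:
    """Hypothetical helper: Pearson correlation for every pair of columns.

    Rows where either value is None are dropped pair by pair, similar to how
    pandas.DataFrame.corr excludes NA/null values.
    """
    names = list(columns)
    matrix: Dict[str, Dict[str, float]] = {a: {} for a in names}
    for a in names:
        for b in names:
            # Keep only rows where both columns have a value
            pairs = [(x, y) for x, y in zip(columns[a], columns[b])
                     if x is not None and y is not None]
            n = len(pairs)
            if n < 2:
                matrix[a][b] = math.nan  # not enough observations
                continue
            mean_x = sum(x for x, _ in pairs) / n
            mean_y = sum(y for _, y in pairs) / n
            # Sums of centered products and squares
            sum_xy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
            sum_xx = sum((x - mean_x) ** 2 for x, _ in pairs)
            sum_yy = sum((y - mean_y) ** 2 for _, y in pairs)
            denom = math.sqrt(sum_xx * sum_yy)
            # A constant column has zero variance, so correlation is undefined
            matrix[a][b] = sum_xy / denom if denom else math.nan
    return matrix


data = {
    "x": [1.0, 2.0, 3.0, None],
    "y": [2.0, 4.0, 6.0, 8.0],
    "z": [3.0, 2.0, 1.0, 0.0],
}
result = pairwise_corr(data)
for name, row in result.items():
    print(name, {k: round(v, 3) for k, v in row.items()})
```

Here `x` and `y` correlate at 1.0 using only the three rows where `x` has a value, while `z` moves opposite to both and correlates at -1.0.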
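The frequent-items idea behind `freqItems` can be sketched in plain Python. The function below follows the counter-based algorithm of Karp, Schenker, and Papadimitriou cited above: it keeps at most `1/support` candidate counters and, whenever that limit is exceeded, decrements every counter and discards the ones that reach zero. The result is a superset of the truly frequent items, which is why false positives are possible. The name `frequent_items` is hypothetical, and this is a sketch of the technique, not Spark's implementation.

```python
from typing import Dict, Hashable, Iterable, Set


def frequent_items(values: Iterable[Hashable], support: float) -> Set[Hashable]:
    """Hypothetical helper: in one pass, return a superset of the items whose
    frequency exceeds support * (number of values)."""
    if not 0 < support <= 1:
        raise ValueError("support must be in (0, 1]")
    max_counters = int(1 / support)
    counters: Dict[Hashable, int] = {}
    for item in values:
        if item in counters:
            counters[item] += 1
            continue
        counters[item] = 1
        if len(counters) > max_counters:
            # Too many candidates: decrement all counters, drop the ones at zero
            for key in list(counters):
                counters[key] -= 1
                if counters[key] == 0:
                    del counters[key]
    return set(counters)


sample = ["a", "b", "a", "c", "a", "d", "a"]
print(sorted(frequent_items(sample, support=0.4)))  # ['a', 'd']
```

Only `a` (4 of 7 values) exceeds the 40% threshold; `d` survives only because it was the last candidate added, which illustrates a false positive. PySpark's `freqItems` likewise takes a `support` argument, with a default of 1%.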

- (Exam Topic 3)

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

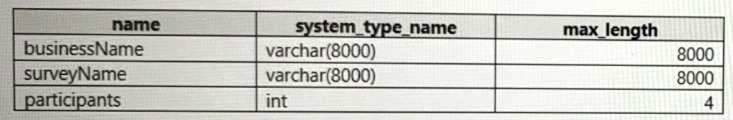

You are using an Azure Synapse Analytics serverless SQL pool to query a collection of Apache Parquet files by using automatic schema inference. The files contain more than 40 million rows of UTF-8-encoded business names, survey names, and participant counts. The database is configured to use the default collation.

The queries use OPENROWSET and infer the schema shown in the following table.

You need to recommend changes to the queries to reduce I/O reads and tempdb usage.

Solution: You recommend defining a data source and view for the Parquet files. You recommend updating the query to use the view.

Does this meet the goal?

Correct Answer:

B

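The goal is met instead by this solution: You recommend using OPENROWSET WITH to explicitly specify the maximum length for businessName and surveyName.

Automatic schema inference types these string columns as varchar(8000), which is far larger than business and survey names require. Declaring a smaller maximum length in the WITH clause avoids oversized column allocations and helps reduce I/O reads and tempdb usage.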

A SELECT...FROM OPENROWSET(BULK...) statement queries the data in a file directly, without importing the data into a table. SELECT...FROM OPENROWSET(BULK...) statements can also list bulk-column aliases by using a format file to specify column names, and also data types.

Reference: https://docs.microsoft.com/en-us/sql/t-sql/functions/openrowset-transact-sql