- (Exam Topic 3)

You are performing feature engineering on a dataset.

You must add a feature named CityName and populate the column value with the text London.

You need to add the new feature to the dataset.

Which Azure Machine Learning Studio module should you use?

Correct Answer:

A

Typical metadata changes might include marking columns as features. References:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/edit-metadata
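
Outside of Studio, the outcome the question describes (a new CityName column whose value is London on every row) can be sketched in plain Python. This is only an illustration of the resulting data shape, not the Studio module itself; the sample rows and the helper name `add_constant_feature` are hypothetical.

```python
# Hypothetical sample rows; the real dataset is not shown in the question.
rows = [
    {"CustomerId": 1, "Spend": 12.5},
    {"CustomerId": 2, "Spend": 8.0},
]


def add_constant_feature(records, name, value):
    """Return copies of the records with column `name` set to `value` on every row."""
    return [{**record, name: value} for record in records]


with_city = add_constant_feature(rows, "CityName", "London")
for row in with_city:
    print(row)
```

The helper returns new dictionaries, so the original rows are left unchanged.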

- (Exam Topic 3)

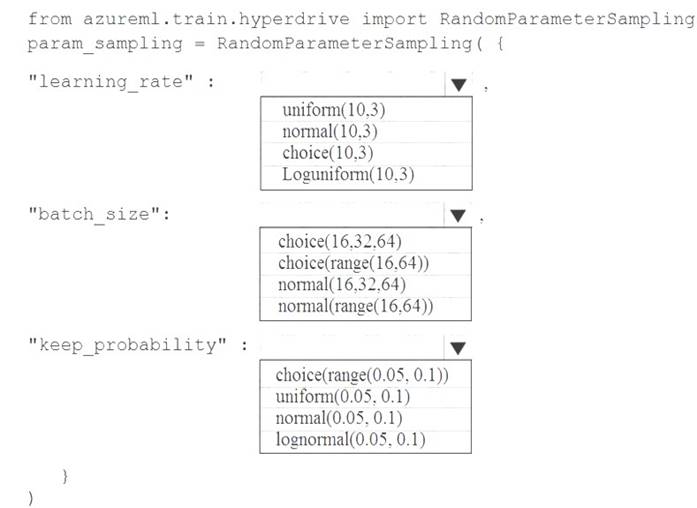

You are using the Azure Machine Learning Service to automate hyperparameter exploration of your neural network classification model.

You must define the hyperparameter space to automatically tune hyperparameters using random sampling according to the following requirements:

The learning rate must be selected from a normal distribution with a mean value of 10 and a standard deviation of 3.

Batch size must be 16, 32, or 64.

Keep probability must be a value selected from a uniform distribution between 0.05 and 0.1.

You need to use the param_sampling method of the Python API for the Azure Machine Learning Service. How should you complete the code segment? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Solution:

In random sampling, hyperparameter values are randomly selected from the defined search space. Random sampling allows the search space to include both discrete and continuous hyperparameters.

Example:

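from azureml.train.hyperdrive import RandomParameterSampling
from azureml.train.hyperdrive import choice, normal, uniform

# Each key is a hyperparameter name; each value is a sampling expression.
param_sampling = RandomParameterSampling({
    "learning_rate": normal(10, 3),
    "keep_probability": uniform(0.05, 0.1),
    "batch_size": choice(16, 32, 64)
})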
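
To make concrete what random sampling over this search space means, here is a minimal sketch that uses only Python's standard `random` module. It is not the HyperDrive implementation; the names `search_space` and `sample_configuration` are assumptions made for illustration.

```python
import random

# The search space from the question, expressed as plain sampling functions.
search_space = {
    "learning_rate": lambda rng: rng.normalvariate(10, 3),   # normal, mean 10, sd 3
    "keep_probability": lambda rng: rng.uniform(0.05, 0.1),  # uniform on [0.05, 0.1]
    "batch_size": lambda rng: rng.choice([16, 32, 64]),      # discrete choice
}


def sample_configuration(space, rng):
    """Draw one value per hyperparameter, independently of the others."""
    return {name: draw(rng) for name, draw in space.items()}


rng = random.Random(0)  # fixed seed so repeated runs give the same draws
configs = [sample_configuration(search_space, rng) for _ in range(5)]
for config in configs:
    print(config)
```

Each printed dictionary corresponds to one candidate run: the continuous parameters are drawn from their distributions and the discrete one is picked from the listed values.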

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/service/how-to-tune-hyperparameters

Does this meet the goal?

Correct Answer:

A

- (Exam Topic 3)

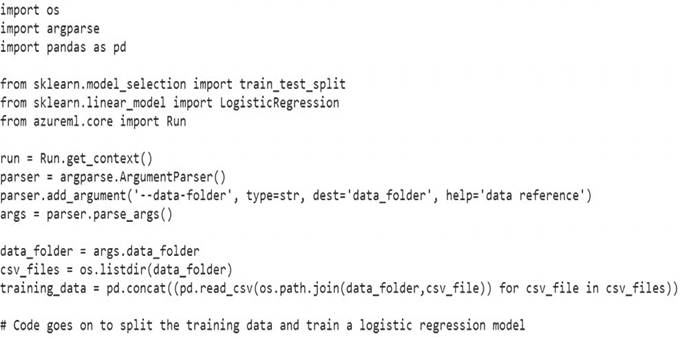

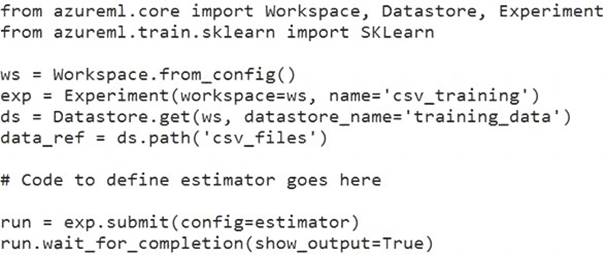

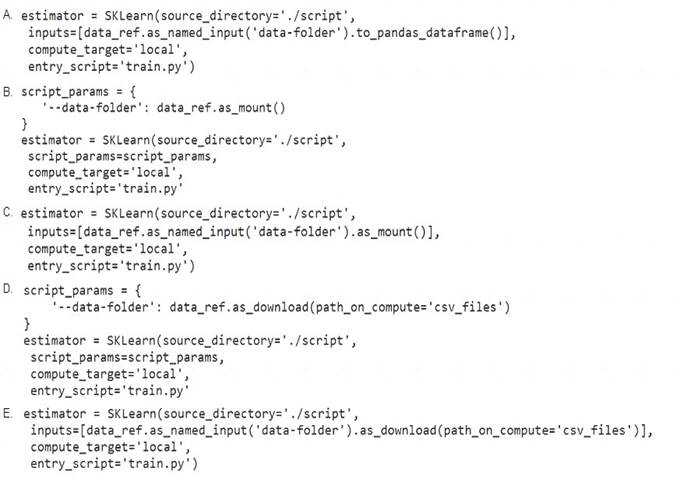

You create a datastore named training_data that references a blob container in an Azure Storage account. The blob container contains a folder named csv_files in which multiple comma-separated values (CSV) files are stored.

You have a script named train.py in a local folder named ./script that you plan to run as an experiment using an estimator. The script includes the following code to read data from the csv_files folder:

You need to configure the estimator for the experiment so that the script can read the data from a data reference named data_ref that references the csv_files folder in the training_data datastore.

Which code should you use to configure the estimator?

Correct Answer:

B

Besides passing the dataset through the inputs parameter in the estimator, you can also pass the dataset through script_params and get the data path (mounting point) in your training script via arguments. This way, you can keep your training script independent of azureml-sdk. In other words, you will be able to use the same training script for local debugging and remote training on any cloud platform.

Example:

from azureml.train.sklearn import SKLearn

script_params = {
    # mount the dataset on the remote compute and pass the mounted path as an argument to the training script
    '--data-folder': mnist_ds.as_named_input('mnist').as_mount(),
    '--regularization': 0.5
}

est = SKLearn(source_directory=script_folder,
              script_params=script_params,
              compute_target=compute_target,
              environment_definition=env,
              entry_script='train_mnist.py')

# Run the experiment
run = experiment.submit(est)
run.wait_for_completion(show_output=True)

Reference:

https://docs.microsoft.com/es-es/azure/machine-learning/how-to-train-with-datasets
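
On the training-script side, reading the path passed through script_params needs nothing from azureml-sdk. The following is a minimal sketch, assuming the argument names `--data-folder` and `--regularization` from the example above; the helper names `parse_args` and `list_csv_files` and the `./data` path are hypothetical.

```python
import argparse
import glob
import os


def parse_args(argv=None):
    """Parse the arguments that the estimator passes to the training script."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--data-folder", type=str, dest="data_folder",
                        help="path where the data is mounted or downloaded")
    parser.add_argument("--regularization", type=float, default=0.5,
                        help="regularization rate")
    return parser.parse_args(argv)


def list_csv_files(data_folder):
    """Return the CSV files found directly under the data folder, sorted by name."""
    return sorted(glob.glob(os.path.join(data_folder, "*.csv")))


# Local debugging: pass an explicit argument list instead of reading sys.argv.
args = parse_args(["--data-folder", "./data", "--regularization", "0.1"])
print(args.data_folder, args.regularization)
print(list_csv_files(args.data_folder))
```

Because the script only sees a folder path, the same code works whether that path is a local directory or a mount point on remote compute.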

- (Exam Topic 3)

You have several machine learning models registered in an Azure Machine Learning workspace. You must use the Fairlearn dashboard to assess fairness in a selected model.

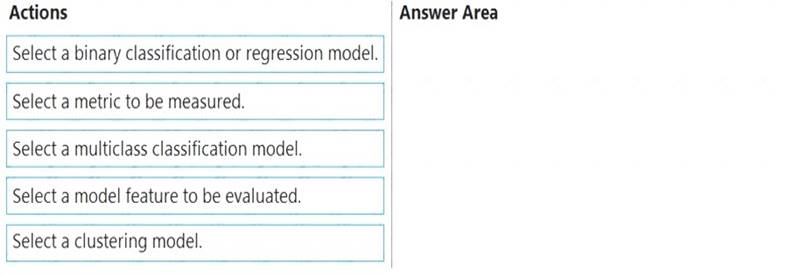

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Solution:

Step 1: Select a model feature to be evaluated.

Step 2: Select a binary classification or regression model.

Register your models within Azure Machine Learning. For convenience, store the results in a dictionary, which maps the id of the registered model (a string in name:version format) to the predictor itself.

Example:

model_dict = {}

lr_reg_id = register_model("fairness_logistic_regression", lr_predictor)
model_dict[lr_reg_id] = lr_predictor

svm_reg_id = register_model("fairness_svm", svm_predictor)
model_dict[svm_reg_id] = svm_predictor

Step 3: Select a metric to be measured.

Precompute fairness metrics.

Create a dashboard dictionary using Fairlearn's metrics package.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-machine-learning-fairness-aml
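
As a rough idea of what precomputing fairness metrics involves, the sketch below computes a metric separately for each value of a sensitive feature. It is written in plain Python and does not use Fairlearn's API; the function name `group_metrics` and the toy labels are assumptions made for illustration.

```python
from collections import defaultdict


def group_metrics(y_true, y_pred, sensitive):
    """Per-group accuracy and selection rate for binary (0/1) labels."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "selected": 0})
    for truth, pred, group in zip(y_true, y_pred, sensitive):
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(truth == pred)   # prediction matches the label
        c["selected"] += int(pred == 1)      # model predicted the positive class
    return {
        group: {
            "accuracy": c["correct"] / c["n"],
            "selection_rate": c["selected"] / c["n"],
        }
        for group, c in counts.items()
    }


# Toy data: eight predictions split across two groups of a sensitive feature.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
sensitive = ["a", "a", "a", "a", "b", "b", "b", "b"]

metrics = group_metrics(y_true, y_pred, sensitive)
rates = [m["selection_rate"] for m in metrics.values()]
selection_rate_gap = max(rates) - min(rates)
print(metrics)
print(selection_rate_gap)
```

The gap between the highest and lowest selection rate is the quantity usually called the demographic parity difference; a dashboard compares per-group values like these across models.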

Does this meet the goal?

Correct Answer:

A

- (Exam Topic 3)

You are analyzing a dataset containing historical data from a local taxi company. You are developing a regression model.

You must predict the fare of a taxi trip.

You need to select performance metrics to correctly evaluate the regression model. Which two metrics can you use? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

Correct Answer:

BE

References:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/evaluate-model
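
For orientation, the metrics that the Evaluate Model module reports for regression models include mean absolute error, root mean squared error, and the coefficient of determination. The sketch below computes these three by hand; the fare values are made up for illustration and the function name `regression_metrics` is hypothetical.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, RMSE, and R^2 for paired actual and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    return {
        "mae": sum(abs(e) for e in errors) / n,
        "rmse": math.sqrt(ss_res / n),
        "r2": 1 - ss_res / ss_tot,
    }


# Made-up taxi fares, for illustration only.
actual_fares = [10.0, 12.0, 8.0, 15.0]
predicted_fares = [11.0, 11.0, 9.0, 14.0]

scores = regression_metrics(actual_fares, predicted_fares)
print(scores)
```

Lower MAE and RMSE indicate predictions closer to the actual fares, while an R^2 closer to 1 indicates that the model explains more of the variance in the fares.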