Exhibit:

Task:

A Dockerfile has been prepared at ~/human-stork/build/Dockerfile

Solution:

Does this meet the goal?

Correct Answer:

A

Exhibit:

Task:

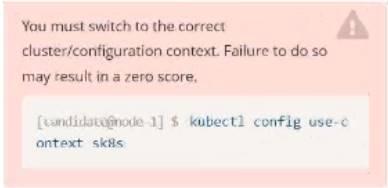

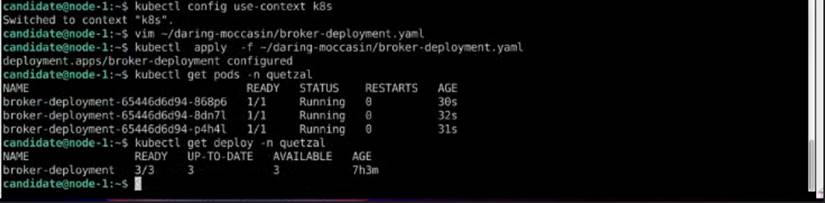

Modify the existing Deployment named broker-deployment running in namespace quetzal so that its containers.

The broker-deployment's manifest file can be found at:

Solution:

Does this meet the goal?

Correct Answer:

A

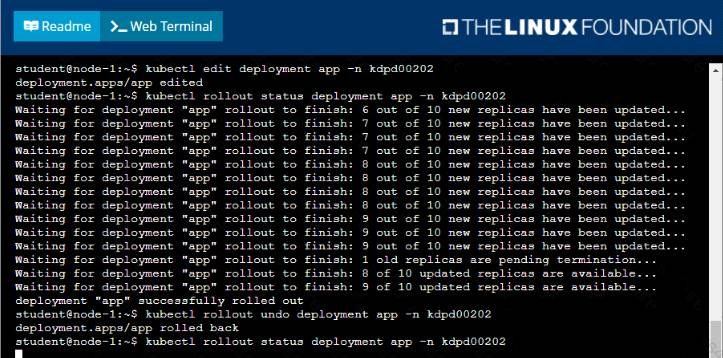

Exhibit:

Context

As a Kubernetes application developer, you will often find yourself needing to update a running application.

Task

Please complete the following:

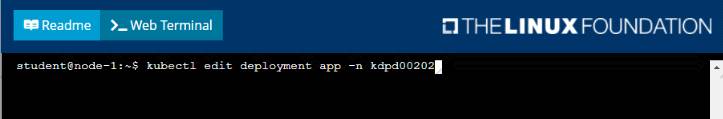

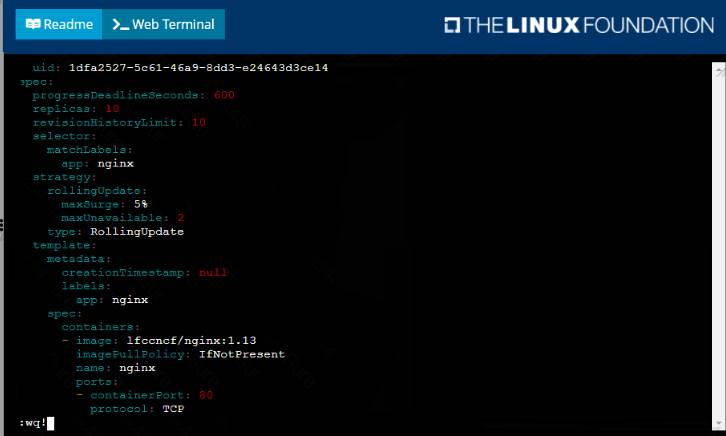

• Update the app deployment in the kdpd00202 namespace with a maxSurge of 5% and a maxUnavailable of 2%

• Perform a rolling update of the web1 deployment, changing the lfccncf/nginx image version to 1.13

• Roll back the app deployment to the previous version
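For the first bullet, it helps to see what the percentages mean in pods. The sketch below, assuming the rounding rules described in the Kubernetes Deployment documentation (maxSurge rounds up, maxUnavailable rounds down), converts an int-or-percent value into an absolute pod count. The function names and the replica counts are hypothetical, since the task does not state how many replicas the app deployment runs. The "both resolve to zero" fallback mirrors, to our understanding, what the Deployment controller does so a rollout can still make progress.

```python
import re
from typing import Union

IntOrPercent = Union[int, str]


def to_pods(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Convert an int or an "N%" string into an absolute pod count."""
    if isinstance(value, int):
        return value  # absolute values are used as-is
    match = re.fullmatch(r"(\d+)%", value)
    if match is None:
        raise ValueError(f"not an int or percentage: {value!r}")
    scaled = int(match.group(1)) * replicas
    # Integer ceil/floor avoids floating-point rounding surprises.
    return -(-scaled // 100) if round_up else scaled // 100


def rollout_bounds(replicas: int, max_surge: IntOrPercent,
                   max_unavailable: IntOrPercent) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = to_pods(max_surge, replicas, round_up=True)
    unavailable = to_pods(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Assumed fallback: allow one unavailable pod so the rollout can proceed.
        unavailable = 1
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    # Hypothetical replica counts; the task does not state one.
    for replicas in (10, 40, 100):
        print(replicas, rollout_bounds(replicas, "5%", "2%"))
```

With 10 replicas, for example, a 5% surge rounds up to one extra pod while 2% unavailable rounds down to zero, so the rollout never drops below 10 available pods.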

Solution:

Does this meet the goal?

Correct Answer:

A

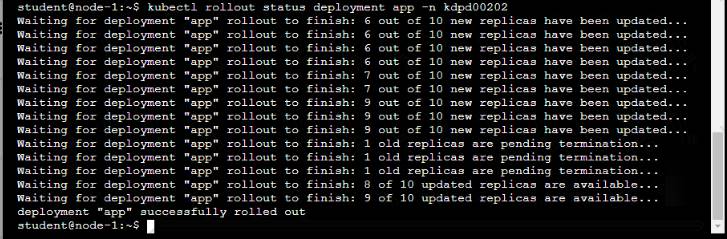

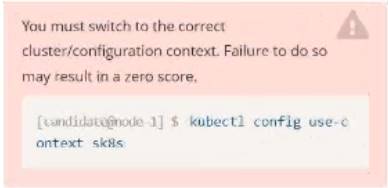

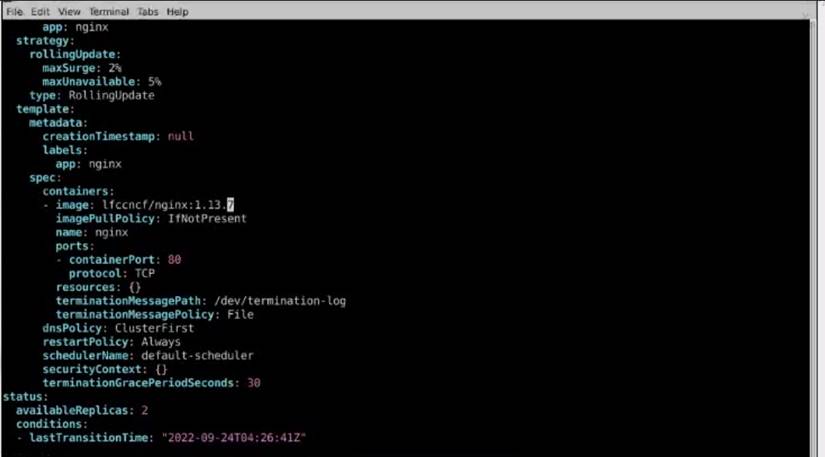

Exhibit:

Task:

1- Update the proportional scaling configuration of the Deployment web1 in the ckad00015 namespace setting maxSurge to 2 and maxUnavailable to 59

2- Update the web1 Deployment to use version tag 1.13.7 for the lfccncf/nginx container image.

3- Perform a rollback of the web1 Deployment to its previous version
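Steps 2 and 3 rely on how Deployment revisions work: each change to the pod template creates a new revision, and a rollback re-applies an earlier template instead of erasing history. The toy model below sketches that behaviour, assuming only the container image changes; the class name and the starting tag `nginx:1.12` are hypothetical, since the task does not give the image's current version. On a real cluster, step 3 corresponds to `kubectl rollout undo deployment/web1 -n ckad00015`.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RevisionHistory:
    """Toy model of a Deployment's rollout history (hypothetical class).

    Maps revision number -> container image of that revision's pod template.
    """

    revisions: dict[int, str] = field(default_factory=dict)

    def current(self) -> str:
        return self.revisions[max(self.revisions)]

    def apply(self, image: str) -> int:
        """Apply a pod template; return the revision that is now current."""
        if self.revisions and self.current() == image:
            return max(self.revisions)  # unchanged template: no new rollout
        next_rev = max(self.revisions, default=0) + 1
        # A template seen before reuses its old ReplicaSet, which is
        # renumbered as the newest revision instead of being duplicated.
        for rev in [r for r, img in self.revisions.items() if img == image]:
            del self.revisions[rev]
        self.revisions[next_rev] = image
        return next_rev

    def undo(self) -> int:
        """Roll back to the previous revision (cf. `kubectl rollout undo`)."""
        ordered = sorted(self.revisions)
        if len(ordered) < 2:
            raise ValueError("no previous revision to roll back to")
        return self.apply(self.revisions[ordered[-2]])


if __name__ == "__main__":
    history = RevisionHistory()
    history.apply("nginx:1.12")    # hypothetical starting version
    history.apply("nginx:1.13.7")  # step 2: update the image
    history.undo()                 # step 3: roll back
    print(history.revisions)       # {2: 'nginx:1.13.7', 3: 'nginx:1.12'}
```

This is why `kubectl rollout history` after an undo lists the restored template under a new, higher revision number rather than under its original one.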

Solution:

Does this meet the goal?

Correct Answer:

A

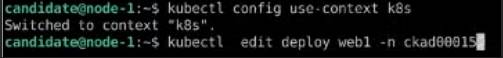

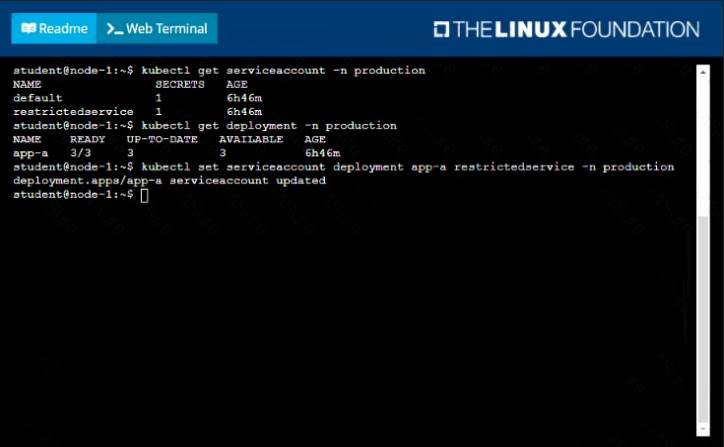

Exhibit:

Context

Your application’s namespace requires a specific service account to be used.

Task

Update the app-a deployment in the production namespace to run as the restrictedservice service account. The service account has already been created.
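The change this task asks for lives in a single field, `spec.template.spec.serviceAccountName`. As a sketch, the snippet below builds the strategic-merge patch body that `kubectl patch` accepts through its `-p` flag; the script filename in the usage line is hypothetical. Because the field sits in the pod template, applying it triggers an ordinary rolling update of app-a.

```python
import json


def service_account_patch(service_account: str) -> str:
    """Return a JSON strategic-merge patch setting the pod template's service account."""
    patch = {"spec": {"template": {"spec": {"serviceAccountName": service_account}}}}
    return json.dumps(patch)


if __name__ == "__main__":
    # Prints: {"spec": {"template": {"spec": {"serviceAccountName": "restrictedservice"}}}}
    print(service_account_patch("restrictedservice"))
```

Usage: `kubectl patch deployment app-a -n production -p "$(python3 sa_patch.py)"`. kubectl's `set serviceaccount` subcommand (`kubectl set serviceaccount deployment app-a restrictedservice -n production`) makes the same edit without a separate patch body.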

Solution:

Does this meet the goal?

Correct Answer:

A