CORRECT TEXT

List all pods, showing their name and namespace, using a JSONPath expression.

Solution:

kubectl get pods -o=jsonpath="{.items[*]['metadata.name', 'metadata.namespace']}"
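The union expression prints every pod name first and then every namespace, all on one line, which is hard to read. A one-pod-per-line variant uses the JSONPath `range`/`end` construct that kubectl supports. This is a sketch, and the helper name `list_pods_with_ns` is hypothetical:

```shell
# Hypothetical helper: print "<name><TAB><namespace>" for each pod, one per line.
# Extra arguments (e.g. --all-namespaces) are passed through to kubectl.
list_pods_with_ns() {
  kubectl get pods "$@" -o=jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.namespace}{"\n"}{end}'
}
```

Run `list_pods_with_ns` for the current namespace, or `list_pods_with_ns --all-namespaces` to cover the whole cluster.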

Does this meet the goal?

Correct Answer:

A

CORRECT TEXT

Get a list of all pods in all namespaces and write it to the file “/opt/pods-list.yaml”.

Solution:

kubectl get po --all-namespaces > /opt/pods-list.yaml
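The redirect above saves kubectl's default table output, so despite the `.yaml` extension the file contains plain text. If the file is expected to hold actual YAML (an assumption, not stated in the task), adding `-o yaml` does that. The helper name `save_all_pods` is hypothetical:

```shell
# Hypothetical helper: dump every pod in every namespace to a file as YAML.
# Defaults to the path from the task; pass another path as $1 to override.
save_all_pods() {
  out_file="${1:-/opt/pods-list.yaml}"
  kubectl get pods --all-namespaces -o yaml > "$out_file"
}
```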

Does this meet the goal?

Correct Answer:

A

CORRECT TEXT

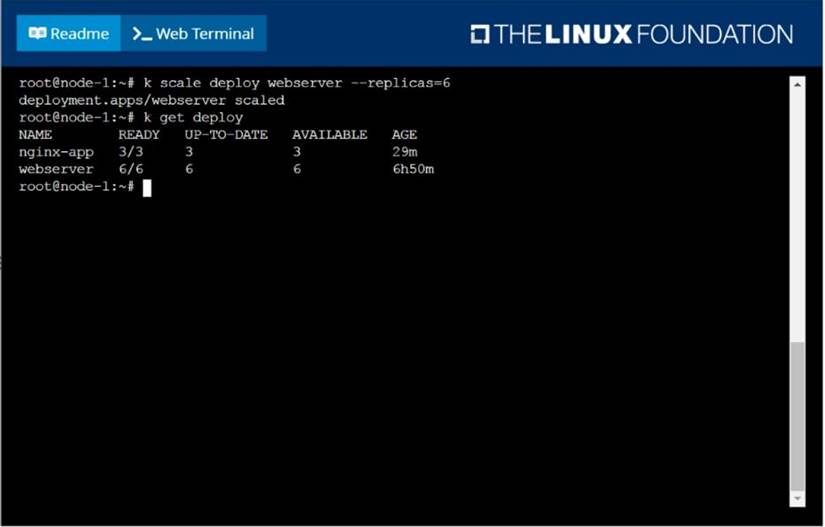

Scale the deployment webserver to 6 pods.

Solution:

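A minimal sketch of the scaling step, assuming the deployment `webserver` lives in the current namespace. `scale_deploy` is a hypothetical helper name:

```shell
# Hypothetical helper: scale deployment $1 to $2 replicas, then show its status.
scale_deploy() {
  kubectl scale deployment "$1" --replicas="$2" &&
    kubectl get deployment "$1"
}
```

For this item: `scale_deploy webserver 6`.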

Does this meet the goal?

Correct Answer:

A

CORRECT TEXT

Score: 4%

Task

Scale the deployment presentation to 6 pods.

Solution:


kubectl get deployment

kubectl scale deployment.apps/presentation --replicas=6
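To confirm that all six replicas actually became available, you can wait on the rollout. This is a sketch: the helper name `wait_for_rollout` and the 120-second timeout are assumptions.

```shell
# Hypothetical helper: block until every replica of deployment $1 is available,
# or fail once the 120s timeout expires.
wait_for_rollout() {
  kubectl rollout status "deployment/$1" --timeout=120s
}
```

For this item: `wait_for_rollout presentation`.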

Does this meet the goal?

Correct Answer:

A

CORRECT TEXT

For this item, you will have to ssh to the nodes ik8s-master-0 and ik8s-node-0 and complete all tasks on these nodes. Ensure that you return to the base node (hostname: node-1) when you have completed this item.

Context

As an administrator of a small development team, you have been asked to set up a Kubernetes cluster to test the viability of a new application.

Task

You must use kubeadm to perform this task. Any kubeadm invocations will require the use of the --ignore-preflight-errors=all option.

✑ Configure the node ik8s-master-0 as a master node.

✑ Join the node ik8s-node-0 to the cluster.

You must use the kubeadm configuration file located at /etc/kubeadm.conf when initializing your cluster.

You may use any CNI plugin to complete this task, but if you don't have your favourite CNI plugin's manifest URL at hand, Calico is one popular option:

https://docs.projectcalico.org/v3.14/manifests/calico.yaml

Docker is already installed on both nodes and apt has been configured so that you can install the required tools.

Solution:
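A minimal sketch of the whole procedure under stated assumptions: the preconfigured apt repository provides packages named kubelet, kubeadm and kubectl; kubeadm writes its admin kubeconfig to the default /etc/kubernetes/admin.conf; and Calico is the chosen CNI plugin. The function names are hypothetical. Every kubeadm call carries `--ignore-preflight-errors=all`, as the task requires.

```shell
# Run on both nodes: install the kubeadm toolchain from the preconfigured apt repo.
install_tools() {
  sudo apt-get update &&
    sudo apt-get install -y kubelet kubeadm kubectl
}

# Run on ik8s-master-0: initialize the control plane from the task's config file,
# give the current user a kubeconfig, then install Calico as the CNI plugin.
init_master() {
  sudo kubeadm init --config /etc/kubeadm.conf --ignore-preflight-errors=all || return 1
  mkdir -p "$HOME/.kube"
  sudo cp /etc/kubernetes/admin.conf "$HOME/.kube/config"
  sudo chown "$(id -u):$(id -g)" "$HOME/.kube/config"
  kubectl apply -f https://docs.projectcalico.org/v3.14/manifests/calico.yaml
}

# Run on ik8s-node-0, passing the arguments of the "kubeadm join ..." line
# that kubeadm init prints at the end of its output.
join_worker() {
  sudo kubeadm join "$@" --ignore-preflight-errors=all
}
```

On the master, run `install_tools` and then `init_master`. On the worker, run `install_tools` and then `join_worker <MASTER_IP>:6443 --token <TOKEN> --discovery-token-ca-cert-hash sha256:<HASH>`. These placeholders are hypothetical, so copy the real values from the init output. Afterwards, `kubectl get nodes` on the master should eventually show both nodes as Ready.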

Does this meet the goal?

Correct Answer:

A