CORRECT TEXT

Score: 13%

Task

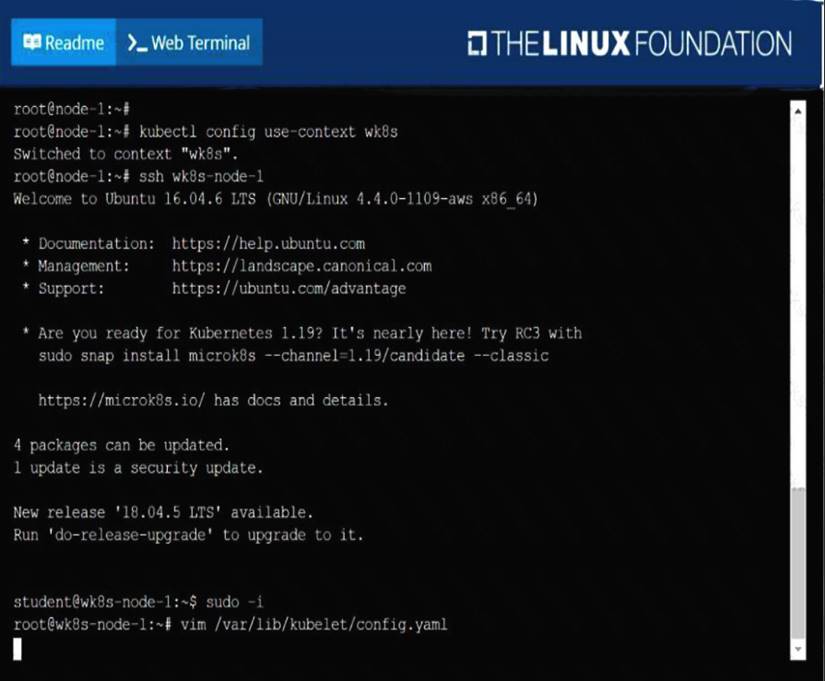

A Kubernetes worker node named wk8s-node-0 is in state NotReady. Investigate why this is the case, and perform any appropriate steps to bring the node to a Ready state, ensuring that any changes are made permanent.

Solution:


ssh wk8s-node-0

sudo -i

systemctl status kubelet

systemctl start kubelet

systemctl enable kubelet
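The start and enable steps above can be combined into one idempotent call. The following is a minimal sketch, assuming it is run as root on wk8s-node-0 itself; the helper name `ensure_kubelet` is hypothetical and not part of the original solution.

```shell
# Hypothetical helper: run as root on the affected node (wk8s-node-0).
ensure_kubelet() {
  # Start kubelet now and enable it at boot, so the fix survives a reboot.
  systemctl enable --now kubelet || return 1
  # Print the resulting state; both checks should succeed on a healthy node.
  systemctl is-enabled kubelet && systemctl is-active kubelet
}

# Usage (on the node): ensure_kubelet
```

If the unit fails to start, `journalctl -u kubelet` shows the kubelet logs. Once it is running, `kubectl get nodes` from the control plane should eventually report the node as Ready.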

Does this meet the goal?

Correct Answer:

A

CORRECT TEXT

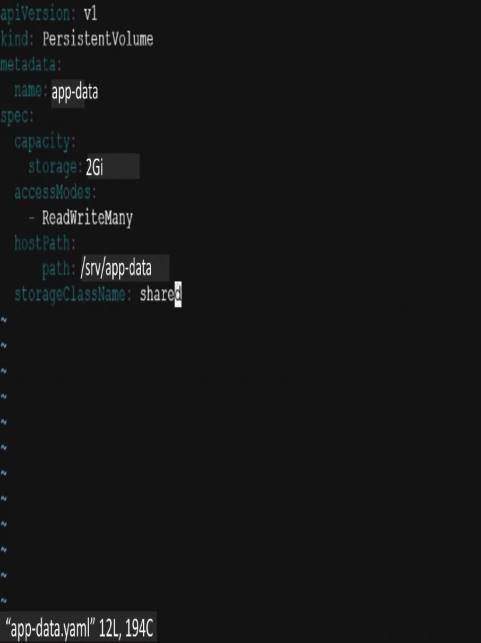

Create a persistent volume with name app-data, of capacity 2Gi and access mode ReadWriteMany. The type of volume is hostPath and its location is /srv/app-data.

Solution:


Persistent Volume

A PersistentVolume is a piece of storage in a Kubernetes cluster. PersistentVolumes are a cluster-level resource, like nodes, and do not belong to any namespace. A PersistentVolume is provisioned by the administrator and has a fixed capacity. This way, a developer deploying an app on Kubernetes does not need to know the underlying storage infrastructure. When the developer needs a certain amount of persistent storage for an application, they request it through a claim, and the cluster satisfies that claim from a PersistentVolume the administrator has provisioned.

Creating Persistent Volume

kind: PersistentVolume
apiVersion: v1
metadata:
  name: app-data
spec:
  capacity: # defines the capacity of the PV we are creating
    storage: 2Gi # the amount of storage we are trying to claim
  accessModes: # defines the access rights of the volume we are creating
    - ReadWriteMany
  hostPath:
    path: "/srv/app-data" # path on the host that backs the volume

Challenge

✑ Create a Persistent Volume named app-data, with access mode ReadWriteMany, storage class name shared, 2Gi of storage capacity and the host path /srv/app-data.

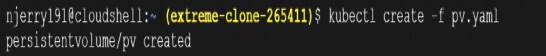

* 2. Save the file and create the persistent volume.

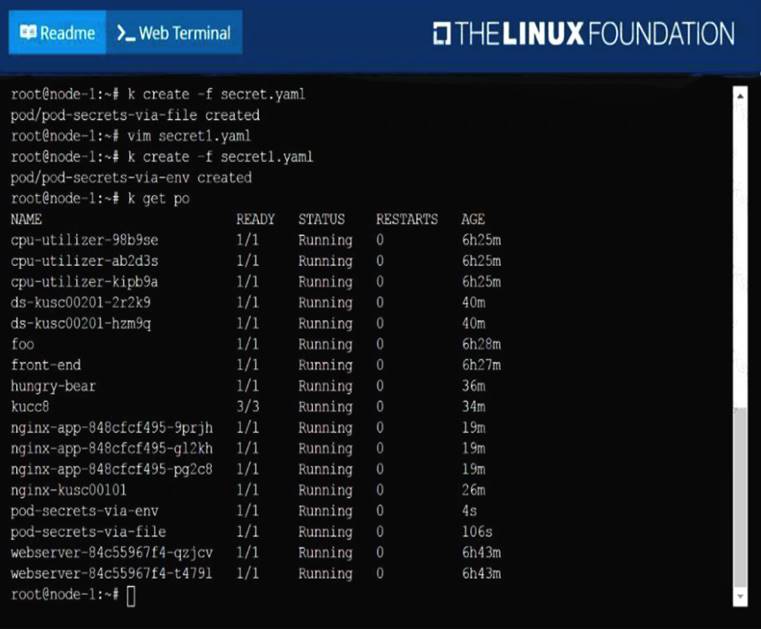


* 3. View the persistent volume.

✑ Our persistent volume status is Available, meaning it has not been bound to a claim yet. This status will change once a PersistentVolumeClaim binds to the PersistentVolume.
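The manifest for this challenge is only shown as an image, so here is a hedged sketch of what it might look like, with the `storageClassName: shared` field the challenge asks for. The file name `app-data-pv.yaml` is an assumption chosen for illustration.

```shell
# Write the challenge manifest to a hypothetical file name, app-data-pv.yaml.
cat > app-data-pv.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: app-data
spec:
  storageClassName: shared
  capacity:
    storage: 2Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /srv/app-data
EOF
```

The file can then be created with `kubectl create -f app-data-pv.yaml` and inspected with `kubectl get pv app-data`.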

PersistentVolumeClaim

In a real ecosystem, a system admin creates the PersistentVolume, then a developer creates a PersistentVolumeClaim that is referenced in a pod. A PersistentVolumeClaim is created by specifying the minimum size and the access mode required from the PersistentVolume.

Challenge

✑ Create a Persistent Volume Claim that requests the Persistent Volume we had created above. The claim should request 2Gi. Ensure that the Persistent Volume Claim has the same storageClassName as the persistentVolume you had previously created.

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: app-data
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
  storageClassName: shared

* 2. Save and create the pvc

njerry191@cloudshell:~ (extreme-clone-2654111)$ kubectl create -f app-data.yaml

persistentvolumeclaim/app-data created

* 3. View the pvc


* 4. Let’s see what has changed in the pv we had initially created.


The status of the PersistentVolume has now changed from Available to Bound.
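The status change can also be checked without reading the full table output. This is a small sketch, assuming the volume is named app-data as above; the helper name `pv_phase` is hypothetical.

```shell
# Hypothetical helper: print only the phase of a PersistentVolume
# (for example Available or Bound).
pv_phase() {
  kubectl get pv "$1" -o jsonpath='{.status.phase}'
}

# Usage: pv_phase app-data
```

The same jsonpath expression works for the claim with `kubectl get pvc app-data`.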

* 5. Create a new pod named myapp with image nginx that mounts the Persistent Volume Claim at the path /var/app/config.

Mounting a Claim

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  name: myapp
spec:
  volumes:
    - name: configpvc
      persistentVolumeClaim:
        claimName: app-data
  containers:
    - image: nginx
      name: app
      volumeMounts:
        - mountPath: "/var/app/config"
          name: configpvc

Does this meet the goal?

Correct Answer:

A

CORRECT TEXT

Create a pod with image nginx called nginx and allow traffic on port 80

Solution:

kubectl run nginx --image=nginx --restart=Never --port=80
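Note that `--port=80` only records container port 80 in the pod spec. The sketch below shows two optional follow-ups, assuming the pod name nginx from the task: previewing the generated manifest with a client-side dry run, and exposing the pod through a Service if the task is read as requiring network access. Both helper names are hypothetical.

```shell
# Preview the pod manifest without creating anything (client-side dry run).
preview_nginx_pod() {
  kubectl run nginx --image=nginx --restart=Never --port=80 --dry-run=client -o yaml
}

# Optionally expose the running pod through a ClusterIP Service on port 80.
expose_nginx_pod() {
  kubectl expose pod nginx --port=80 --target-port=80
}

# Usage: preview_nginx_pod; expose_nginx_pod
```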

Does this meet the goal?

Correct Answer:

A

CORRECT TEXT

List the nginx pod with custom columns POD_NAME and POD_STATUS

Solution:

kubectl get po -o=custom-columns="POD_NAME:.metadata.name,POD_STATUS:.status.containerStatuses[].state"
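The container state above prints a nested object. As an alternative sketch, assuming a one-word status is acceptable for the task, the pod phase can be used as the POD_STATUS column and the listing restricted to a single pod. The helper name `pod_status_columns` is hypothetical.

```shell
# Hypothetical helper: list one pod with POD_NAME and POD_STATUS columns,
# using the pod phase (Pending, Running, ...) as the status value.
pod_status_columns() {
  kubectl get pod "$1" -o custom-columns='POD_NAME:.metadata.name,POD_STATUS:.status.phase'
}

# Usage: pod_status_columns nginx
```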

Does this meet the goal?

Correct Answer:

A

CORRECT TEXT

Score: 4%

Task

Set the node named ek8s-node-1 as unavailable and reschedule all the pods running on it.

Solution:


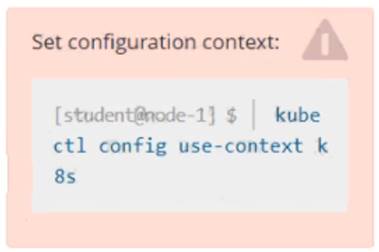

[student@node-1] > ssh ek8s

kubectl cordon ek8s-node-1

kubectl drain ek8s-node-1 --delete-local-data --ignore-daemonsets --force

On kubectl 1.20 and later, --delete-local-data is deprecated; use --delete-emptydir-data instead.
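To confirm the drain worked, the pods still scheduled on the node can be listed with a field selector. This is a minimal sketch, assuming the node name from the task; the helper name `pods_on_node` is hypothetical.

```shell
# Hypothetical helper: list pods in all namespaces still scheduled on a node.
pods_on_node() {
  kubectl get pods --all-namespaces -o wide --field-selector "spec.nodeName=$1"
}

# Usage: pods_on_node ek8s-node-1
```

After a successful drain, only DaemonSet-managed pods should remain, and `kubectl get node ek8s-node-1` should show the node with scheduling disabled.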

Does this meet the goal?

Correct Answer:

A